Why We Love the NVIDIA TX2 – Embedded AI Specs

The NVIDIA TX2, with its advanced graphics processing unit (GPU) capabilities, packs immense computational power into a small module. It runs machine learning workloads efficiently, which is why we chose it as the foundation for Boulder AI’s DNNCam and use it in other applications, too.

Deep Learning and Artificial Intelligence

In recent years, remarkable advances in machine learning hardware have made it possible for developers to build small, self-contained AI devices. One of these advances is the NVIDIA TX2, which offers:

Support for the reduced-precision FP16 and INT8 data types, which speeds up AI algorithms that perform a large number of numerical calculations.

Twice the speed and energy efficiency of its predecessor, enabling users to deploy AI algorithms based on deep neural networks (DNNs) directly at the source of the data rather than moving all the data to the cloud and processing it on a remote server. This minimizes latency.

A range of software options that let developers quickly deploy machine learning algorithms on the TX2.
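To make the reduced-precision point concrete, here is a minimal Python sketch of what FP16 and INT8 storage mean for a handful of values. It runs on any machine and does not touch the TX2 or any NVIDIA library. The weight values, the helper names `to_fp16` and `quantize_int8`, and the symmetric per-tensor scaling scheme are all assumptions chosen for illustration, not a description of how any particular framework quantizes.

```python
import struct

def to_fp16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("<e", struct.pack("<e", x))[0]

def quantize_int8(values, scale):
    """Map floats to signed 8-bit integers using one shared scale factor."""
    return [max(-127, min(127, round(v / scale))) for v in values]

# Made-up example weights (hypothetical, for illustration only).
weights = [0.1, -0.6, 0.25, 1.0]

# Storage cost of the same four values in each format.
fp32_bytes = len(struct.pack(f"<{len(weights)}f", *weights))
fp16_bytes = len(struct.pack(f"<{len(weights)}e", *weights))

# Symmetric scaling: the largest magnitude maps to 127.
scale = max(abs(w) for w in weights) / 127
int8_values = quantize_int8(weights, scale)
int8_bytes = len(struct.pack(f"<{len(int8_values)}b", *int8_values))

print(fp32_bytes, fp16_bytes, int8_bytes)  # 16 8 4

# Precision given up in exchange for the smaller footprint.
print("0.1 stored as FP16:", to_fp16(0.1))
print("INT8 codes:", int8_values)
print("INT8 values decoded:", [q * scale for q in int8_values])
```

Halving or quartering the bytes per value means less data to move through memory for the same network, which is one reason reduced precision can speed up inference, at the cost of some rounding error.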

More on NVIDIA TX2 Software

CUDA, NVIDIA’s platform for general-purpose GPU programming, has been the frontrunner for accelerating AI algorithms. In fact, it is the backbone of many other libraries.

The recently released JetPack 3.1 lets users download and use TensorRT 2.1 as well as cuDNN v6.0. Both of these software packages improve deep learning performance on the TX2.

TensorRT is useful for optimizing an existing DNN by using cuDNN primitives and reducing memory bandwidth demands of the network graph to generate faster inference results. In short, it makes the most efficient use of GPU hardware possible by auto-tuning the neural network for the specific hardware platform.
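One way an optimizer can reduce memory bandwidth demands is by fusing adjacent layers so that intermediate results are never written out and read back. The toy Python sketch below illustrates that idea only. It is not TensorRT's API or its actual implementation, and the functions `unfused` and `fused`, the input values, and the element-count "traffic" measure are hypothetical.

```python
def unfused(x, bias):
    """Two separate layers: each reads a full tensor and writes a full tensor."""
    traffic = 0
    tmp = [v + bias for v in x]          # layer 1: bias add
    traffic += len(x) + len(tmp)         # read x, write tmp
    out = [max(0.0, v) for v in tmp]     # layer 2: ReLU
    traffic += len(tmp) + len(out)       # read tmp, write out
    return out, traffic

def fused(x, bias):
    """One fused layer: the intermediate value is never stored as a tensor."""
    out = [max(0.0, v + bias) for v in x]
    traffic = len(x) + len(out)          # read x, write out
    return out, traffic

x = [-1.0, 0.5, 2.0, -0.25]              # made-up activations
out_a, traffic_a = unfused(x, 0.5)
out_b, traffic_b = fused(x, 0.5)

print(out_a == out_b)                    # True: same result either way
print(traffic_a, traffic_b)              # 16 8
```

Both versions compute the same output, but the fused version touches half as many elements in memory. On a bandwidth-limited embedded GPU, savings of this kind translate into faster inference.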

cuDNN is a library provided by NVIDIA and built on CUDA. It contains finely tuned implementations of the most commonly used primitives in deep neural networks. This lets AI developers spend more time implementing features rather than debugging performance-related issues.

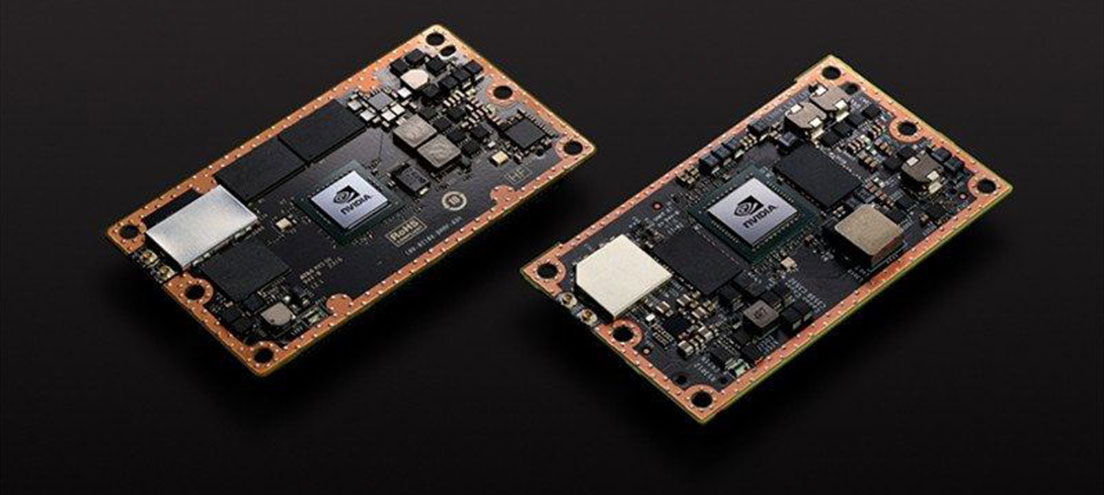

NVIDIA TX2 Architecture

The NVIDIA TX2 has an integrated Pascal GPU with 256 CUDA cores. For context, Pascal is the same architecture behind the GeForce GTX 1080 Ti, the highest-performance graphics card on the market at the time of writing. That GPU architecture has been scaled down to fit inside a small chip that targets embedded devices.
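For a rough sense of what 256 CUDA cores can deliver, the back-of-the-envelope Python calculation below estimates theoretical peak throughput. Only the core count comes from the text above. The 1.3 GHz clock and the assumption that FP16 runs at twice the FP32 rate are illustrative assumptions, so treat the results as an upper bound on paper rather than a measured figure.

```python
def peak_gflops(cuda_cores: int, clock_ghz: float, flops_per_core_per_cycle: int = 2) -> float:
    """Theoretical peak in GFLOPS: cores x clock x operations per core per cycle.

    The default of 2 counts one fused multiply-add as two floating-point operations.
    """
    return cuda_cores * clock_ghz * flops_per_core_per_cycle

CUDA_CORES = 256           # stated in the article
ASSUMED_CLOCK_GHZ = 1.3    # assumption: check the module's actual clock settings

fp32_peak = peak_gflops(CUDA_CORES, ASSUMED_CLOCK_GHZ)
# Assumption: FP16 packs two values per operation, doubling the rate.
fp16_peak = peak_gflops(CUDA_CORES, ASSUMED_CLOCK_GHZ, flops_per_core_per_cycle=4)

print(f"FP32 peak: {fp32_peak:.1f} GFLOPS")
print(f"FP16 peak: {fp16_peak:.1f} GFLOPS")
```

Real workloads land below these numbers because memory bandwidth, not arithmetic, is often the limiting factor.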

Pascal is the most energy-efficient GPU architecture NVIDIA has designed to date. A variety of software libraries and APIs are available to access and program the Pascal GPU inside the NVIDIA TX2. The most prominent are CUDA, OpenGL, and Vulkan.

Why We Work With The NVIDIA TX2

With all these features and its hardware acceleration support for deep learning, the NVIDIA TX2 is the best platform available for designing high-end, compact products that leverage advances in AI technology. That’s why Boulder AI relies on it.

If you have questions about the technical aspects of our work, please contact us.
